Practical AI for Historians (and the Arts): Part I

Use AI to transcribe and correct handwritten historical documents

Most of the conversation around generative AI has focused on its potential for existential disruption. But if you aren’t immersed in the technology, that might seem hard to believe. For many of us, AI still feels removed from the day-to-day work of research and writing, and it’s probably difficult to see what all the hype is about. After all, what can a chatbot offer a scholar?

In this next series of posts, I want to look at some of the practical things we can do with generative AI. As a historian, I am going to focus on things that I find useful in my own work, but much of this will be broadly applicable to other disciplines as well.

ChatGPT is Only One Example

First, though, set aside your preconceptions. Right now, it’s easy to fall into the trap of thinking that ChatGPT is the innovative technology that’s changing everything, but it’s not. The truly revolutionary thing is the Large Language Model (LLM) behind the curtain.

As I’ve written about before, LLMs use natural language processing and pattern recognition in ways that even their creators don’t fully understand. They do this according to set instructions and parameters that govern how they use their training data (billions of pages of text) as well as information supplied by the user, called “context.” Let’s set aside technical questions about how this actually works and focus on the key point: “chatting” with the AI is only one possible application of the underlying LLM technology. You can use these models to do a whole range of other tasks, many of which are both routine and monotonous.

Get AI to Transcribe a Handwritten Document

One of the most common and time-consuming tasks for historians is transcribing handwritten historical documents. To speed up the process, you can use an AI tool called Transkribus and then use ChatGPT to correct errors in the transcription. To be clear, GPT-4 will likely soon be able to do all of this “in-house,” as it has some amazing new vision (image-reading) capabilities that haven’t been fully released yet. For the moment, though, Transkribus is the place to start.

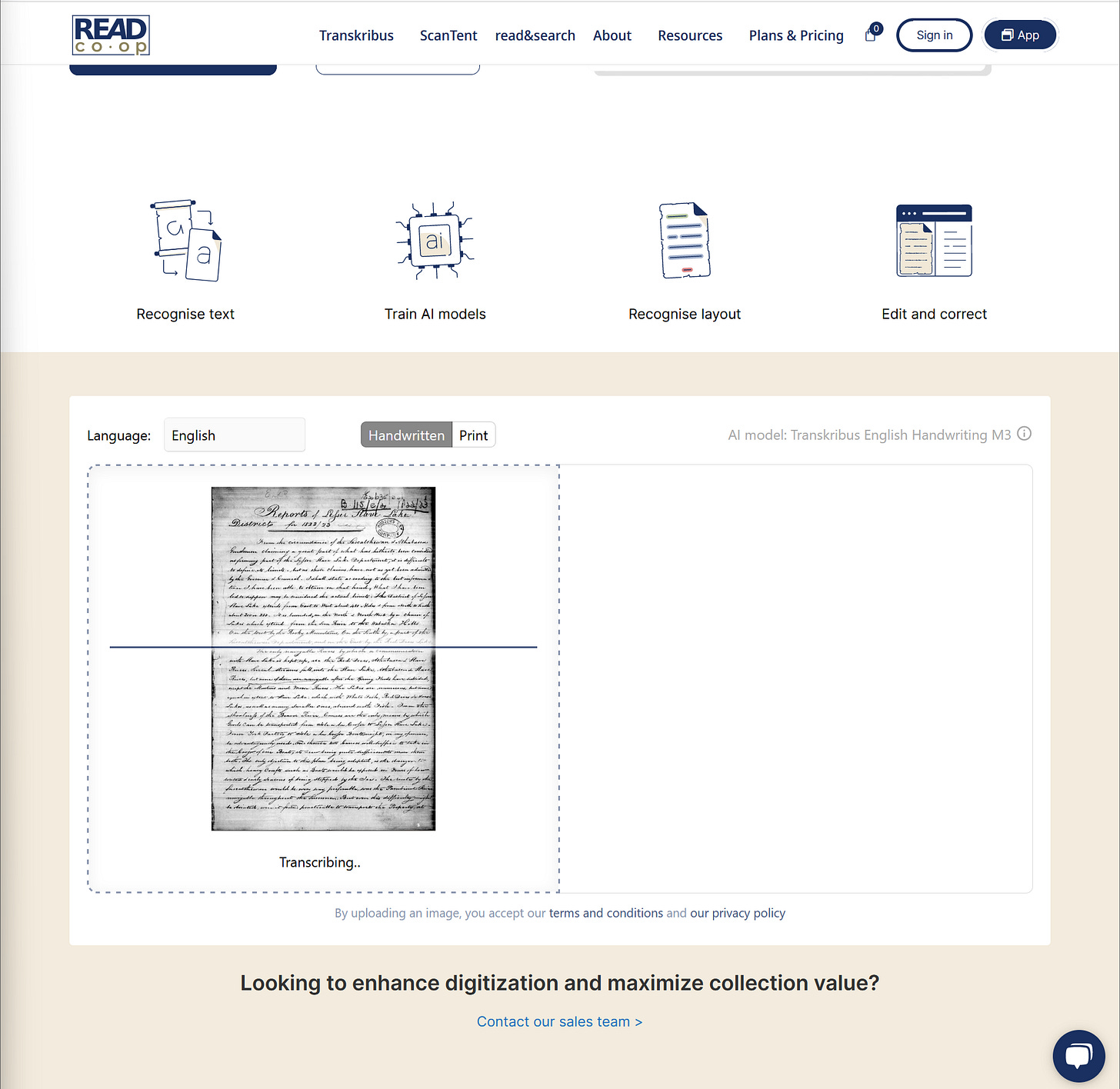

Transkribus is a web-based program developed by “a consortium of leading research groups from all over Europe,” based at the University of Innsbruck. It uses machine learning to convert both print (especially older typefaces and Fraktur) and handwriting into machine-readable text. Transkribus offers a free trial (500 pages of free transcription to start), after which additional credits can be purchased by subscription. To try it out, you can also do one or two pages on the website without signing up, as I’ll show you below.

Transkribus performs very well on its own with high-resolution photographs of documents, using a built-in English handwriting model its team developed. With a subscription, you can also fine-tune your own model to make transcriptions more accurate, provided you have a large volume of text. But very often we have to work with less-than-perfect documents, perhaps from microfilm. Transkribus can still read these, but they tend to generate a lot of errors that make the text look a bit like gibberish.

As an example, I intentionally chose a poorly photographed, microfilmed page from the Hudson’s Bay Company’s 1823-23 annual report for the Lesser Slave Lake District, pictured above. I then went to the Transkribus website, selected “English” from the drop-down menu, and dragged and dropped a JPEG of the document onto the page. It transcribed the document in a few seconds.

Correct the Errors with ChatGPT

Given that my scan was taken from a low-resolution image of poor-quality microfilm, the resulting transcription is actually pretty good. Nevertheless, it contains a lot of errors and is hard to read. To correct them quickly, we can use the pattern-recognition and predictive capabilities of the GPT models to generate a cleaner text. In this example, I’ll be using GPT-4 (currently available only behind a wait-listed paywall), but the free GPT-3.5 Turbo does a pretty good job too.

First, we need to give ChatGPT a clear prompt, explaining what we want it to do and why. Prompting is one of the most important aspects of working with AI. In effect, it is a form of natural language programming. While you can certainly ask ChatGPT a basic question, when you provide it with more information about what you want it to do—as well as relevant documents and other text to work with—you will almost always get better results. I’ll focus on “prompt engineering” in a later post.

In this case I prompted ChatGPT’s GPT-4 model as follows:

Here I tried to construct a clear, concise prompt. I told ChatGPT what I was asking it to do and why, explaining that I would provide a document that I wanted it to transcribe. I also asked it to leave word order, syntax, grammar, and spelling as they are, essentially keeping the text “as is” apart from the OCR errors. This matters because otherwise it would attempt to correct a whole range of things we don’t want it to touch, like style and grammar. Note that I phrased these directions in a positive, affirmative way: I told it to maintain the original wording rather than commanding it not to change the word order. For a variety of technical reasons, this positive approach generally works better with generative AI.
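
The exact prompt isn’t reproduced in this text, so the Python below is only a hypothetical sketch of a prompt built along the lines just described. The wording and the `build_correction_prompt` name are assumptions; what it illustrates is the structure: what to do and why, what to keep (phrased positively), a request to bracket changes, and a clear boundary around the document.

```python
def build_correction_prompt(ocr_text: str) -> str:
    """Return a prompt asking an LLM to fix only the OCR errors in ocr_text."""
    # Hypothetical wording: say what to do and why, then what to keep,
    # phrased positively ("keep") rather than as a prohibition.
    instructions = (
        "I am a historian working with an OCR transcription of a handwritten "
        "historical document. The transcription contains errors introduced by "
        "the OCR process. Please correct only those OCR errors. "
        "Keep the original word order, syntax, grammar, and spelling exactly as written. "
        "Enclose every word you change in square brackets, for example [subject]. "
        "Return only the corrected text."
    )
    # Mark the document off clearly so the model can tell instructions from source text.
    return f"{instructions}\n\nDocument:\n---\n{ocr_text.strip()}\n---"
```

You could paste the returned string straight into ChatGPT or send it through the API.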

After I hit enter and watched the response roll in, the resulting text (see below) was far more readable! I also asked the model to enclose its changes in square brackets so that I can quickly check the transcription for errors, which still happen.
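
Because the changes are bracketed, a few lines of Python can pull them out into a review checklist. This is a sketch that assumes the model used square brackets only for its own edits; if your source already contains editorial brackets, you would need a different marker. `list_changes` is a hypothetical helper name.

```python
import re

# One bracketed edit, e.g. "[subject]". Assumes the model used square brackets
# only for its own changes, as the prompt asked.
BRACKETED = re.compile(r"\[([^\[\]]+)\]")


def list_changes(corrected_text: str, context: int = 30) -> list[tuple[str, str]]:
    """Return (changed_text, surrounding_snippet) pairs for manual review."""
    changes = []
    for match in BRACKETED.finditer(corrected_text):
        start = max(0, match.start() - context)
        end = min(len(corrected_text), match.end() + context)
        # Collapse line breaks so each snippet reads on one line.
        snippet = " ".join(corrected_text[start:end].split())
        changes.append((match.group(1), snippet))
    return changes
```

Each pair gives the changed word and a little context, which you can then check against the page image.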

Interestingly, in most cases when an OCRed word is truly unreadable, the AI will pick an appropriate word based on the surrounding context, sometimes producing a choice that is close in meaning but not a direct transcription. For example, the second sentence should read: “I shall state according to the best information I have been able to obtain on that head, what I have been led to suppose may be considered the actual limits.” However, the word “head” in the original was OCRed as “herrby,” and GPT-4 predicted the intended meaning, substituting the word “subject.” Not bad, but not exactly right. If you are transcribing documents to speed up reading through a large corpus, this is not normally a big issue. But if you plan to actually use a text, proofreading is essential, in much the same way that I would proofread any transcriptions completed by a research assistant before citing or quoting from them.
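
Bracketing relies on the model reporting its own changes, which it may not always do. A complementary proofreading aid, sketched below with Python’s standard `difflib` module, compares the OCR output with the corrected text word by word and lists every span that differs, so a substitution like “herrby” becoming “subject” shows up even if it was never bracketed. The `word_changes` helper is hypothetical.

```python
import difflib


def word_changes(ocr_text: str, corrected_text: str) -> list[tuple[str, str]]:
    """Return (ocr_words, corrected_words) for every span the model replaced, added, or removed."""
    before = ocr_text.split()
    after = corrected_text.split()
    # Compare lists of words rather than characters, so each change is reported as whole words.
    matcher = difflib.SequenceMatcher(a=before, b=after, autojunk=False)
    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            changes.append((" ".join(before[i1:i2]), " ".join(after[j1:j2])))
    return changes
```

For the example above, comparing “…able to obtain on that herrby, what…” with “…able to obtain on that subject, what…” would report the single pair (“herrby,”, “subject,”).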

You need to be especially careful with proper nouns, particularly people’s names. They are both less likely to be correct in the OCRed text and less likely to be fixed by ChatGPT. Interestingly, in my experience human research assistants also struggle most with the names of people and places, as well as obscure words and phrases. For both humans and AI, this is because these are often the least predictable and least familiar parts of a text.
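
One rough way to find the proper nouns worth checking is to list capitalized words that don’t begin a sentence. The sketch below (a hypothetical `likely_proper_nouns` helper) is a crude heuristic: it will over-flag, since older documents often capitalize common nouns, and abbreviations such as “Mr.” will confuse its sentence splitting. Over-flagging is the safer error when proofreading, though.

```python
import re


def likely_proper_nouns(text: str) -> list[str]:
    """Return capitalized words that do not begin a sentence, in order of first appearance."""
    found = []
    # Rough sentence split: break after ., !, or ? followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", text):
        words = re.findall(r"[A-Za-z][A-Za-z'-]*", sentence)
        for word in words[1:]:  # skip the sentence-initial word
            if word[0].isupper() and word != "I" and word not in found:
                found.append(word)
    return found
```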

When using ChatGPT in this way, you also need to double-check numbers against the original, because the model cannot know whether they were transcribed correctly. For example, in the text above, the district is said to extend 280 miles from east to west and about 200 or 250 miles from north to south. However, the second pair of numbers was OCRed incorrectly as “200 or 200 miles,” and GPT-4 has no way of knowing that this is an OCR error.
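
Since the model can’t verify numbers, one option is to pull every number out of the corrected text, with a few words of context, as a checklist to compare against the page image. The sketch below also flags one suspicious pattern from this example: a number offered as its own alternative (“200 or 200”). The regular expressions and helper names are assumptions for illustration, and the checklist only tells you where to look; the page itself is still the authority.

```python
import re

# Integers or decimals such as "280", "1,500", or "2.5".
NUMBER = re.compile(r"\d+(?:[.,]\d+)*")
# A number offered as its own alternative, e.g. "200 or 200".
REPEATED = re.compile(r"\b(\d+)\s+or\s+\1\b")


def number_checklist(text: str, context: int = 25) -> list[str]:
    """Return one line per number in text, with nearby words, for checking against the page."""
    lines = []
    for match in NUMBER.finditer(text):
        start = max(0, match.start() - context)
        end = min(len(text), match.end() + context)
        snippet = " ".join(text[start:end].split())  # collapse line breaks
        lines.append(f"{match.group()}: ...{snippet}...")
    return lines


def suspicious_repeats(text: str) -> list[str]:
    """Return numbers that appear as 'N or N', a likely OCR duplication."""
    return REPEATED.findall(text)
```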

In longer projects, you can solve many of these problems by providing the AI with customized dictionaries. For example, if you were correcting dozens of pages of fur trade post diaries, you might give ChatGPT a list of people’s names, places, and unusual words (for example, “porkeaters”) that appear frequently in the text but are unlikely to figure prominently in its training data, which is exactly why the list helps. You would do this as part of the prompt, by adding a phrase like: “In completing your corrections, refer to the dictionary below for a list of commonly used names of people, places, and unusual words.” Below this you would provide the lists, input your transcription as before, and hit enter. Prompting is an art, sometimes likened to alchemy, and for good reason.
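
Typing the dictionary out by hand gets tedious across many prompts, so it may help to keep the lists in code and generate that block of text. The sketch below is hypothetical (the `dictionary_block` name and the list layout are assumptions); it reuses the phrase above, and its output would go between your instructions and the transcription.

```python
def dictionary_block(people: list[str], places: list[str], terms: list[str]) -> str:
    """Build the dictionary section of a correction prompt from lists of known words."""
    lines = [
        "In completing your corrections, refer to the dictionary below for a list "
        "of commonly used names of people, places, and unusual words."
    ]
    for label, entries in (("People", people), ("Places", places), ("Unusual words", terms)):
        if entries:  # skip empty categories
            # Sort and de-duplicate so the block stays the same from prompt to prompt.
            lines.append(f"{label}: " + "; ".join(sorted(set(entries))))
    return "\n".join(lines)
```

For instance, `dictionary_block([], ["Lesser Slave Lake"], ["porkeaters"])` yields the instruction line followed by a “Places” line and an “Unusual words” line.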

Conclusion

While the process I’ve outlined is useful for short documents, it would be time-consuming if you wanted to do hundreds of pages. But by combining the Transkribus server and the GPT-4 API with a few lines of Python code, you can automate most of the process: press a button and receive an OCRed, corrected text of several hundred pages in just a few minutes. Granted, this extended version requires some knowledge of and comfort with coding, but learning a little Python may be worth it, as it speeds up a slow, monotonous process enormously. Getting a text into a readable digital form is also a precursor to many of the other types of data processing and analysis we’ll discuss here in future posts.
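
As a sketch of what that automation could look like, the Python below sends every page to GPT-4 through the official `openai` package (version 1 or later, with an `OPENAI_API_KEY` environment variable set). As a simplification, it assumes you have already exported each Transkribus transcription as a separate `.txt` file rather than calling the Transkribus server directly; the folder names, the system prompt, and the helper names are all hypothetical.

```python
from pathlib import Path
from typing import Callable


def correct_folder(src: Path, dest: Path, correct: Callable[[str], str]) -> int:
    """Run `correct` over every .txt page in src and write the results to dest.

    Assumes one plain-text file per page, e.g. exported from Transkribus.
    Returns the number of pages processed.
    """
    dest.mkdir(parents=True, exist_ok=True)
    pages = sorted(src.glob("*.txt"))
    for page in pages:
        corrected = correct(page.read_text(encoding="utf-8"))
        (dest / page.name).write_text(corrected, encoding="utf-8")
    return len(pages)


def gpt_corrector(model: str = "gpt-4") -> Callable[[str], str]:
    """Build a corrector that sends each page to the OpenAI Chat Completions API."""
    from openai import OpenAI  # imported here so correct_folder works without the package

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    system_prompt = (  # hypothetical wording
        "Correct only OCR errors in the user's text. Keep the original word order, "
        "syntax, grammar, and spelling. Enclose every changed word in square brackets."
    )

    def correct(ocr_text: str) -> str:
        response = client.chat.completions.create(
            model=model,
            temperature=0,  # favour the most likely reading over creative rewording
            messages=[
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": ocr_text},
            ],
        )
        return response.choices[0].message.content or ""

    return correct


# Example usage (hypothetical folder names):
# correct_folder(Path("transkribus_pages"), Path("corrected_pages"), gpt_corrector())
```

Passing the corrector in as a function keeps the page loop testable without an API key, and makes it easy to swap in a different model later.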

At the same time, correcting transcriptions is only one very basic example of what ChatGPT and other generative AI can do for academic researchers. I chose it because it shows very well how we can use GPT models to perform specific tasks that speed up the research process, as distinct from the writing process. It also helps us see that ChatGPT and other forms of generative AI are merely the forward-facing parts of much bigger, more powerful technologies.

At the moment, the question of how we’ll harness those technologies remains very much open. People are discovering new use cases for AI every day. Unlike most of the computer technologies we’re used to, generative AI doesn’t come in a neat package with preset limits on its functionality. It’s actually up to users to figure out what they can do with it. And for academics, the real promise of AI is not that it will write papers or books for us (far from it, and why would we want it to do that anyway?), but that it will allow us to do many of the less enjoyable aspects of research, writing, and administration much faster.
